A very worthwhile essay to read when you have a spare moment.

Is cloud gaming ecological?

I’ve recently stumbled upon a couple of new companies like OnLive or Gaikai (demo) whose primary business model is to stream video games hosted in huge server farms (the “clouds”) over broadband networks to everyone’s low-powered home computer. This business model makes me think, and not only when I remind myself that today’s video platforms like YouTube already take up a huge share of global bandwidth (somebody calculated this for YouTube last year, before they started high-quality video streaming, and estimated that they stream about 126 petabytes a month).

No, it also makes me think about ecological issues. Let us compare the possible energy consumption of a “traditional” gamer with that of a (possible) future online gamer who uses one of these services. I won’t and can’t give you detailed numbers here, but you can probably see where I am heading if you have ever read Saul Griffith’s game plan – it’s all about getting the full picture of things.

Let’s start with the traditional gamer, who has a desktop PC or laptop with a built-in 3D graphics card, processor and sound system. When he plays video games, all his components are very busy: the CPU calculates the AI and game logic, and the graphics card runs the pixel and vertex shaders, rendering billions of pixels, vertices and polygons every second into a digital image stream, which is then sent to the user’s monitor at the highest possible frame rate. A sound system outputs the game’s voices, music and sound effects with the help of the computer’s built-in sound card. As I said, I can’t give you a final number here because every setup is a little different, but you can probably imagine how much power even an average gamer setup uses: several hundred watts.

How does the online gamer compare to that? Well, at first glance it looks good. The only things this gamer’s computer has to process are video and sound, and the video only has to be decoded from a regular encoded digital format. Most PCs, even those with a lower clock rate, will be able to accomplish this task. The sound will probably be, by today’s standards, only simple stereo, so there is no need for a custom sound processor or a big sound setup either. I’d guess the usual consumption for this setup would be less than one hundred watts. Sounds great? Maybe, but maybe not.

The thing is that the video signal itself has to be generated first – on a high-end machine or a “cloud” of computers. This means that the graphics and CPU power consumption is moved from the “client” (the gamer’s PC) to a “server” component; it did not simply vanish. It is no longer a single computer that consumes energy to let the user play, but possibly a whole park of machines. And the parts of that park which process the game’s contents need extra power. I don’t know how much, but I bet it won’t be little.

Ok, server farms might be better suited for this kind of task, you might say, because virtualizing these computing-intensive tasks would mean you could run several server instances in parallel and therefore also use their power more ecologically… But wait, this is not a simple web server idling most of the time which gets virtualized here, we’re speaking of game virtualization. Remember how the single user’s PC was under full load while computing the game’s contents? And how much can the program code of a game, which is written to run on a single PC, really be virtualized and parallelized? Does every one of these online gaming clients need dedicated hardware in the end…?

Now, let’s assume the services somehow managed to work around these problems smartly – the online gamer’s power consumption footprint has of course already risen, because we learned that his video signal needed to be created somewhere else first, which might have cost a lot of power. But we’re still not there – the signal is still in the “cloud” – and it’s huge! Uncompressed true-color video, even at a resolution of 1024 by 768 pixels (low by today’s standards), takes about 75 megabytes per second for a smooth experience! Hell, if I get a 1 MB/s download rate today I’m already happy…
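To sanity-check that figure, here is a quick back-of-the-envelope sketch in Python. The colour depth and frame rate are assumptions that are not stated above: 3 bytes per pixel (24-bit true color) and 30 frames per second. With those values the raw stream comes out in the same ballpark as the number quoted above.

```python
def uncompressed_video_rate(width, height, bytes_per_pixel, fps):
    """Return the raw (uncompressed) video data rate in bytes per second."""
    return width * height * bytes_per_pixel * fps


# Assumed values: 24-bit colour (3 bytes per pixel) and 30 frames per second.
rate = uncompressed_video_rate(1024, 768, 3, 30)
print(f"{rate / 1e6:.1f} MB/s")  # prints "70.8 MB/s"

# How strongly would the stream have to be compressed to fit a 1 MB/s line?
download_rate = 1_000_000  # bytes per second, the "happy" download rate above
ratio = rate / download_rate
print(f"about {ratio:.0f}:1")  # prints "about 71:1"
```

So under these assumptions the stream would have to shrink by a factor of roughly 70 just to squeeze through a 1 MB/s connection.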

So, of course the video signal needs to be compressed. While the later decompression is not as costly, the compression is, especially for real-time video: it takes lots of processing power and a very good codec like H.264. Special, dedicated hardware might do this task faster than an average Joe’s PC components, but this hardware still needs extra power which we have to take into account.

Are we done with the online gamer? Well, almost: the video signal is created, compressed and ready for transport, but it hasn’t been transported yet. We need the good old internet for this, sending a constant, huge stream of packets over dozens of hops and myriads of cables to him. Every extra piece of hardware needed for this extra network load again draws hundreds, if not thousands of watts. Of course these components do not serve one gamer exclusively, but their share of bandwidth and power consumption surely differs depending on whether you browse a website, listen to online radio or stream full-screen video.

As I said multiple times, I can’t give you any detailed numbers, but I really, really have the bad feeling that the whole idea of game virtualization is just a big, fat waste of resources – especially energy.

Home

A must-see for every human – especially for those from the western world. No strings attached, the movie speaks for itself.

15 billion chickens and counting

We all know how hard it is to communicate dry statistics to somebody, and I guess it’s not me alone who has a hard time grasping the relations between big numbers (financial crisis, anyone?) – but this nice little widget is just fantastic. It amazes me every time how much creativity people have and how they create wonderful, entertaining and in this case even educational things. Based on multiple officially available statistics, the guys from poodwaddle.com created a world clock which shows the growth and decline rates of almost everything. Some of them are more accurate than others, and almost all of them display interpolated values, of course. It’s still a big joy to watch these things, and some numbers really get you thinking…

Thomas says…

Simply the best and most unique game in… well, in a long, long time. It’s worth the 1500 Wii Points or 20 bucks for the PC / Mac / Linux version, really. Trust me. Only this one time.

reCaptcha

I’ve added a captcha plugin to this site (reCaptcha) to fight the huge amount of random URL comment spam which is unfortunately not caught by my Akismet installation – sorry for the inconvenience.

At least, if you type in the captcha correctly, your comment should now be visible instantly.

280slides

My boss Thomas came home from San Francisco today, where he attended the annual WWDC, Apple’s Worldwide Developers Conference, and he brought some interesting news and stuff along (Snow Leopard Alpha install party, anyone?).

I haven’t had the chance to have a longer chat with him yet, but one thing he was totally in awe of was a session he attended in the indie developer track the other day. I forgot what he told me it was called, but basically it was about a young startup company which “just” created a PowerPoint / iWork Keynote equivalent which runs completely inside your browser… you can find it here:

Back yet?

Have you noticed the overall responsive UI? Have you seen the semi-transparent tool windows with their soft drop shadows? Have you tried to insert a shape via drag’n’drop and changed its size and form? And of course you noticed how the little preview pages on the left instantly updated when you changed something in your main slide, didn’t you?

As a normal Joe you’d probably say “hey, decent application, very nice!” – as a developer of any kind you should by now simply be blown away…

A bit of explanation follows.

What you’ve just seen was a completely new web user interface framework built on top of a completely new web programming language named Objective-J, which in turn is built on top of plain old JavaScript 1.x. One of the founders of 280 North, Francisco Ryan Tolmasky, apparently loved his Objective-C for developing Mac OS X desktop applications so much that he decided to create a version for any browser (the underlying JavaScript can be found here for the interested).

Ok, now, nice, somebody invented a new scripting language on top of JavaScript – now what? Shouldn’t this be painfully slow? What’s the point?

As you’ve seen, it is not slow at all, and I guess with Firefox 3 and newer versions of Safari / WebKit it should get even faster. And the point behind this is that if the foundation stands as-is, it’s just a matter of time until all the core functionality of the Cocoa frameworks is reproduced – those programming libraries which are already used for all these fancy Mac OS X applications (not to forget the iPhone / iPod touch applications and the native Windows versions of iTunes and Safari). What if you could write an Objective-C application in the future and, with minimal changes to its rendering source code, just publish it as a web application running in everyone’s browser?!

Wow, now this is very cool…

Of course there are similar efforts to create “rich internet applications”: most of them need some kind of browser plugin or runtime (Flash, Adobe AIR, Silverlight, to name a few) and some are install-free (basically everything that Google has created, like Docs and Spreadsheets, the calendar app, GMail, …). While widgets on the former usually look very decent and maybe even adapt to your local style settings, pure web-based JavaScript apps often look like outlaws – or at least do not provide all the widgets you’re used to if you’re developing desktop applications. With whatever drives 280slides, we might see the best of both worlds: widgets which look and feel like the widgets in desktop applications and which are completely install-free.

The guy(s) behind 280North promised to release Objective-J (and hopefully the Frameworks on top of it) soon under objective-j.org and I’m very very excited to see more of it.

One last anecdote Thomas told me today – Francisco Ryan Tolmasky was asked during the presentation of 280slides what he did before he founded his company, i.e. he was asked for his background. Imagine a young guy standing in front of the audience, probably aged under 25, answering “Well, I’ve worked for Apple on the iPhone version 1, but after that had been finished, I decided to leave the company. What was left for me there anyways, doing it again for iPhone 2 was not appealing after all…”

Oh my god.

Compromise a coffee machine

Just found via heise: The Jura F90 Coffee machine’s connectivity kit which “enables [the communication] with the Internet, via a PC [to] download parameters to configure [the] espresso machine to your own personal taste” seems to have some security problems:

The connectivity kit uses the connectivity of the PC it is running on to connect the coffee machine to the internet. This allows a remote coffee machine “engineer” to diagnose any problems and to remotely do a preliminary service.

Best yet, the software allows a remote attacker to gain access to the Windows XP system it is running on at the level of the user.

(Source)

So next time your coffee is too strong or too weak – look out for nearby hackers!

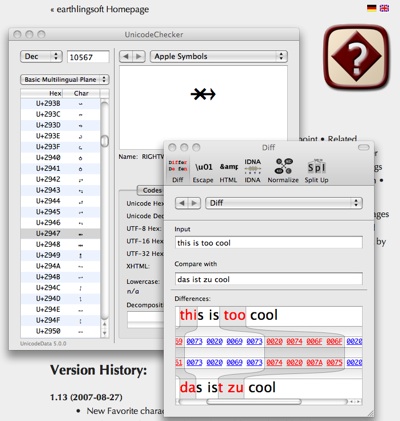

Unicode Character Tool

I’ve been looking for a Mac OS X equivalent of KDE’s kcharselect tonight and before I noticed that there is something similar already built in (the character map which is available from the internationalization menu), I stumbled upon UnicodeChecker:

And wow, this is a very fine application which goes even beyond the options the built-in solution provides. For me the following things were particularly useful:

- Browse the whole Unicode range by character blocks, either sorted by codepoints or by definition

- Built-in search for character names (Spotlight indexing possible) – say, you need a character / glyph to display a triangle, just search for “triangle” in Spotlight and it opens up in UnicodeChecker!

- Bookmarks and History of recently shown characters / codepoints

- Conversion from/to HTML / IDNA / JavaScript / CSS and UTF-8/16/32 encodings – very useful if you have ever stumbled across problems like how to properly encode a Unicode string for a JavaScript alert() box

- Splitting up Unicode sequences – “Why are my text breaks broken? Oh – must have been this non-breaking Unicode space…”

- And last but not least: a very clean interface.
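To illustrate the alert() problem mentioned in the list above, here is a minimal Python sketch of what such a conversion boils down to. It is not how UnicodeChecker does it; `js_escape` is a hypothetical helper name, and the sketch only escapes non-ASCII characters (quotes and backslashes would need extra handling before the result goes into a real string literal).

```python
def js_escape(text):
    """Replace every non-ASCII character with JavaScript \\uXXXX escapes."""
    out = []
    for ch in text:
        if ord(ch) < 128:
            out.append(ch)  # plain ASCII is passed through unchanged
        else:
            # JavaScript strings are UTF-16, so characters outside the
            # Basic Multilingual Plane become two escapes (a surrogate pair).
            data = ch.encode("utf-16-be")
            for i in range(0, len(data), 2):
                unit = int.from_bytes(data[i:i + 2], "big")
                out.append(f"\\u{unit:04X}")
    return "".join(out)


print(js_escape("Grüße ▲"))  # prints Gr\u00FC\u00DFe \u25B2
```

The escaped result is pure ASCII, so it survives whatever encoding the page or script file happens to be served in.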

So while dealing with encodings is probably not the sexiest thing on the planet, this application surely makes it fun to browse the Unicode range.

And if you still think you don’t need this application, just check out one of the other applications the authors, Steffen Kamp and Sven-S. Porst, have created – controlling iTunes by giving your Mac notebook a slap sounds interesting as well, doesn’t it?

Nine Inch Nails go Creative Commons

People who regularly visit Digg.com probably already know it: Nine Inch Nails – one of my favourite bands – just released their new full-length album “Ghosts I-IV” with 36 tracks under the Creative Commons Attribution Non-Commercial Share Alike license! You can download the album from their official site in various editions, among them a completely free download version. The paid versions range from $5 for the download-only version (encoded as 320 kbps MP3, lossless FLAC or some lossless Apple codec) including a PDF booklet to a $300 “Ultra-Deluxe Limited Edition Package” – everything 100% DRM-free. Now isn’t that cool?!

But it gets even better:

Now that we’re no longer constrained by a record label, we’ve decided to personally upload Ghosts I, the first of the four volumes, to various torrent sites, because we believe BitTorrent is a revolutionary digital distribution method, and we believe in finding ways to utilize new technologies instead of fighting them.

(Source: The Pirate Bay)

Trent uploaded the first part of the album to The Pirate Bay himself! Now take your hat off to this great man and support him! I’ve just ordered the $10 CD version and am now in the process of downloading the album…