If you regularly check your email on more than one computer (e.g. at home, at work and on the road) and you get a fair amount of (non-spam) email every day, you probably know the problem: client-side email filters just don’t cut it.

Being a novice with all the mail software stuff, my initial simple idea was “hey, let’s look for a web-based procmailrc frontend”, but nothing I found really cut it. So I looked a bit further and stumbled across the Sieve mail filtering language (RFC). Here is an example Sieve file (taken from libsieve-php, a PHP Sieve library):

    require ["fileinto"];

    if header :is "Sender" "owner-ietf-mta-filters@imc.org"
    {
        fileinto "filter";    # move to "filter" mailbox
    }
    elsif address :DOMAIN :is ["From", "To"] "example.com"
    {
        keep;                 # keep in "In" mailbox
    }
    elsif anyof (NOT address :all :contains
                   ["To", "Cc", "Bcc"] "me@example.com",
                 header :matches "subject"
                   ["*make*money*fast*", "*university*dipl*mas*"])
    {
        fileinto "spam";      # move to "spam" mailbox
    }
    else
    {
        fileinto "personal";
    }

To make this work you need to set up an LDA (Local Delivery Agent) for your MTA (Mail Transfer Agent, such as Exim), which puts incoming emails into the local users’ mailboxes. The LDA reads the Sieve script (which resides, e.g., in the user’s home directory) and evaluates its tests to figure out where each message should go.
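To make the evaluation step concrete, here is a rough Python sketch of the decisions the example script above asks the LDA to make. It is not how Dovecot or any real Sieve interpreter works internally; the function names `addresses` and `target_mailbox` are made up for illustration, and `fnmatch` only approximates Sieve's `:matches` wildcards.

```python
from email import message_from_string
from email.utils import getaddresses
from fnmatch import fnmatchcase

SPAM_SUBJECTS = ["*make*money*fast*", "*university*dipl*mas*"]


def addresses(msg, headers):
    """Collect the bare, lower-cased addresses found in the given header fields."""
    values = []
    for name in headers:
        values.extend(msg.get_all(name, []))
    return [addr.lower() for _, addr in getaddresses(values)]


def target_mailbox(raw_message):
    """Return the mailbox the example script would pick (hypothetical helper)."""
    msg = message_from_string(raw_message)

    # if header :is "Sender" "owner-ietf-mta-filters@imc.org"
    if msg.get("Sender", "").strip().lower() == "owner-ietf-mta-filters@imc.org":
        return "filter"

    # elsif address :domain :is ["From", "To"] "example.com"
    if any(a.rpartition("@")[2] == "example.com" for a in addresses(msg, ["From", "To"])):
        return "INBOX"  # "keep" leaves the message in the default mailbox

    # elsif anyof (not address :all :contains [...], header :matches "subject" [...])
    not_for_me = not any("me@example.com" in a for a in addresses(msg, ["To", "Cc", "Bcc"]))
    subject = msg.get("Subject", "").lower()
    if not_for_me or any(fnmatchcase(subject, pattern) for pattern in SPAM_SUBJECTS):
        return "spam"

    # else
    return "personal"
```

The first matching branch wins, exactly like the `if` / `elsif` / `else` chain in the script; a real LDA would then write the message into the chosen mailbox.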

Now, while the script itself is already easier to read and understand than a cryptic procmail script, it’s far from perfect:

* Non-technical people will still have a hard time writing these rules

* Users need file-level (i.e. FTP / shell) access to the mail server to edit the script, which is especially problematic if your email users are virtual

The solution: ManageSieve

ManageSieve is a protocol specification which is relatively new and still pretty much in flux, but it is gaining support quickly. It mainly targets problem number two, i.e. it allows users to manage their Sieve scripts without having shell access to the machine. ManageSieve clients authenticate with their IMAP login credentials and run their commands against a dedicated server port (usually 2000).
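To give an idea of what a client sends over that port, here is a small Python sketch that only builds the command strings for logging in, uploading a script and activating it. The command names (`AUTHENTICATE`, `PUTSCRIPT`, `SETACTIVE`) follow the ManageSieve specification as I understand it; since the spec is still moving, individual servers may differ. The helper function names are my own, and nothing here opens a network connection.

```python
import base64


def authenticate_plain(user, password):
    """Build an AUTHENTICATE command using the SASL PLAIN mechanism."""
    # SASL PLAIN payload: authzid NUL authcid NUL password, base64-encoded
    token = base64.b64encode(f"\0{user}\0{password}".encode("utf-8")).decode("ascii")
    return f'AUTHENTICATE "PLAIN" "{token}"\r\n'.encode("ascii")


def putscript(name, script):
    """Build a PUTSCRIPT command that uploads `script` under `name`."""
    body = script.encode("utf-8")
    # {N+} announces a literal: exactly N bytes of script follow the CRLF
    header = f'PUTSCRIPT "{name}" {{{len(body)}+}}\r\n'.encode("utf-8")
    return header + body + b"\r\n"


def setactive(name):
    """Build a SETACTIVE command that makes `name` the active script."""
    return f'SETACTIVE "{name}"\r\n'.encode("utf-8")
```

A client would send `authenticate_plain(...)` first (normally only after the connection has been secured), then `putscript("roundcube", ...)` followed by `setactive("roundcube")`, waiting for the server's `OK` after each step.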

KMail has supported the ManageSieve protocol since KDE 3.5.9, and there is a Thunderbird plugin in the works. KMail was not an option since I’m on a Mac, and while Thunderbird is my main email client, it’s not the client of my girl (who uses Apple’s Mail). Even if I had been able to persuade her to use Thunderbird again, it would have been a no-go for her anyway: the Thunderbird plugin has no nice end-user interface as of now, but merely comes across as a managed script editor (though a “real” UI is planned).

So I was very happy to find out that somebody had at least written a ManageSieve plugin for my webmail client of choice, roundcube.

The setup: Dovecot’s ManageSieve server + Dovecot’s Sieve plugin for deliver + Roundcube’s managesieve patch

Since the ManageSieve standard is not yet completed, implementations tend to differ. The roundcube managesieve implementation was built around and only tested with Dovecot, a popular POP3/IMAP server, so my initial setup (the Exim/Courier IMAP tandem) didn’t fit. I quickly read Dovecot’s docs on Exim integration and decided to give the Courier replacement a try, since it seemed well supported. This was supposed to be the easiest part, `sudo apt-get remove courier-imapd && sudo apt-get install dovecot-imap`, until I noticed that the Dovecot version packaged in Hardy (1.0.10) did not include the needed Sieve patches, so I had to patch and compile everything myself (again, since the ManageSieve specification is not yet finished, it’s not part of the main Dovecot distribution either). Luckily, the exact workflow – downloading and patching Dovecot, downloading managesieve, installing and configuring everything – is documented here.

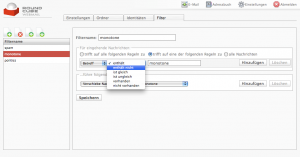

The final missing piece now was roundcube. Downloading and applying the patch was a no-brainer and worked out as expected – after patching a new “Filters” menu popped up in roundcube’s settings view:

Fine, until my first test showed that the rules weren’t applied. So I checked on the server – everything seemed to be in place:

    $ ls -lh .dovecot.sieve* sieve
    lrwxrwxrwx 1 me me   21 2008-11-16 12:09 .dovecot.sieve -> sieve/roundcube.sieve
    -rw------- 1 me me  560 2008-11-16 12:16 .dovecot.sievec

    sieve:
    total 16K
    -rwx------ 1 me me  459 2008-11-16 12:09 roundcube.sieve
    drwx------ 2 me me 4,0K 2008-11-16 12:09 tmp

(The ManageSieve specification allows several Sieve scripts to be stored and one of them to be activated or deactivated. Dovecot’s implementation does this by making .dovecot.sieve a symlink to the active script, sieve/<scriptname>. The .dovecot.sievec file is the compiled, i.e. syntax-checked, version of the script, another implementation detail.)
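The symlink trick is easy to reproduce by hand. The following Python sketch shows the idea under the assumption that the layout is exactly the one from the listing above (a `.dovecot.sieve` link pointing to `sieve/<scriptname>.sieve` relative to the home directory); the two function names are hypothetical and this is not Dovecot's actual code.

```python
import os


def activate(home, script_name):
    """Point .dovecot.sieve at sieve/<script_name>.sieve, replacing any old link."""
    link = os.path.join(home, ".dovecot.sieve")
    target = os.path.join("sieve", script_name + ".sieve")  # relative, as in the listing
    tmp = link + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)
    os.symlink(target, tmp)
    os.replace(tmp, link)  # rename over the old link so there is never a gap


def active_script(home):
    """Return the name of the active script, or None if no script is active."""
    link = os.path.join(home, ".dovecot.sieve")
    if not os.path.islink(link):
        return None
    return os.path.splitext(os.path.basename(os.readlink(link)))[0]
```

Deactivating all scripts would then simply mean removing the link; the script files in `sieve/` stay untouched either way.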

And yes, the rules I had edited from within roundcube had found their way into the file:

    $ cat sieve/roundcube.sieve
    require ["fileinto"];
    # rule:[spam]
    if anyof (header :contains "Subject" "*****SPAM*****")
    {
        fileinto "Trash";
    }
    […]

Looking into the logfile of `deliver` (Dovecot’s LDA) shed some light on the matter:

    deliver(me): 2008-11-16 02:20:28 Info: msgid=: save failed to Trash: Unknown namespace
    deliver(me): 2008-11-16 02:20:28 Info: sieve runtime error: Fileinto: Generic Error
    deliver(me): 2008-11-16 02:20:28 Error: sieve_execute_bytecode(/home/me/.dovecot.sievec) failed

Since Dovecot 1.1, `deliver` respects the IMAP `prefix` setting in dovecot.conf, which I had to set during my courier-imap -> dovecot transition. This setting “virtually” prepends a string such as “INBOX.” to all mailbox names, so a bare “Trash” no longer names a mailbox that `deliver` can find. (The actual use case is to have distinct “public” and “private” IMAP folders with namespaces, but I don’t use that.)

A simple example: if your Maildir folder structure looks like this on disk

    cur
    new
    tmp
    .Foo
    .Foo.Bar

then your mail client is told about this structure in response to IMAP’s LIST command:

    INBOX
    INBOX.Foo
    INBOX.Foo.Bar

This is what was reported to roundcube as well, but roundcube’s IMAP code strips the “INBOX.” prefix for some reason and therefore hands the ManageSieve plugin the wrong mailbox path: “Trash” instead of “INBOX.Trash”.
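The mapping and the mismatch can be sketched in a few lines of Python. This is only an illustration of the idea, assuming a prefix of “INBOX.” and “.” as the hierarchy separator; it is neither Dovecot's nor roundcube's real code, nor the patch mentioned below, and both function names are invented.

```python
def imap_name(maildir_entry, prefix="INBOX."):
    """Map a Maildir++ folder directory (e.g. '.Foo.Bar') to the name LIST reports."""
    if maildir_entry in ("", "."):
        return "INBOX"  # the Maildir root itself (cur/new/tmp) is the inbox
    folder = maildir_entry[1:] if maildir_entry.startswith(".") else maildir_entry
    return prefix + folder


def fileinto_target(displayed_name, prefix="INBOX."):
    """Re-add the namespace prefix that the webmail UI stripped from a folder name."""
    if displayed_name.upper() == "INBOX" or displayed_name.startswith(prefix):
        return displayed_name
    return prefix + displayed_name
```

With these helpers, `imap_name(".Foo.Bar")` gives the name the IMAP client sees, and `fileinto_target("Trash")` gives the name that has to end up in the generated `fileinto` rule for `deliver` to find the mailbox.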

After digging through roundcube’s PHP code for a bit I could fix the issue, using the rather ugly trick of calling a meant-to-be-private function of roundcube’s IMAP API (the patch is available here for the interested), but woohoo, now everything finally works as expected!

And again, a weekend is gone. The outcome? I can filter emails server-side and – I wrote this blog post. I feel it’s about time to do some more substantial things again…

Update: If you managed to set up server-side filtering and wonder why your favourite mail reader Thunderbird does not show you new emails in the various IMAP target folders even though you’ve subscribed to them, make sure you’ve set the preference “mail.check_all_imap_folders_for_new” to “true” (source).